Text

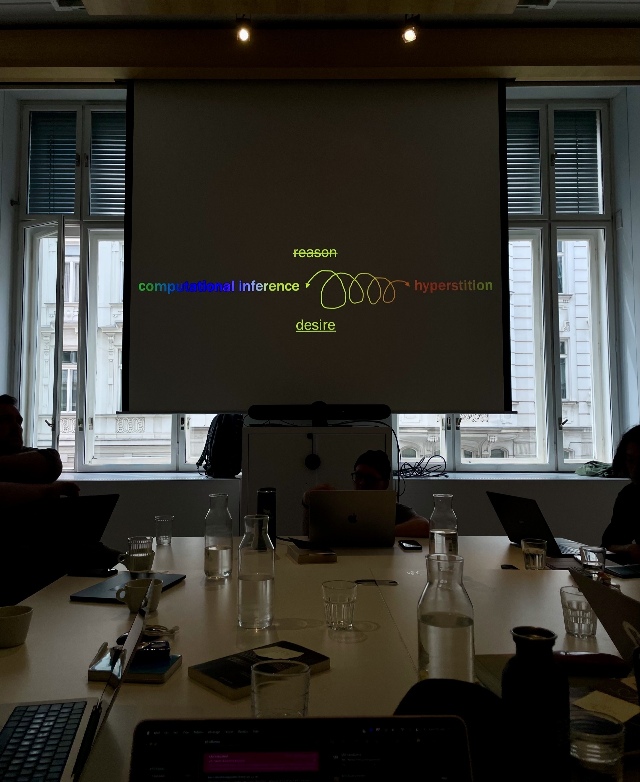

This workshop explores the concept of abductive inference in relation to machine learning, focusing on the distinction between weak and strong abduction. Building on earlier discussions of weak abduction, understood as the generation of conjectural models by current AI systems, we’ll consider what strong abduction might entail, particularly in light of debates around digital aesthetics, creativity, and politics. Central to our inquiry is the contested role of explanation in AI: while classical statistics emphasized interpretability, contemporary algorithmic approaches prioritize prediction and the construction of new, data-driven realities. This tension invites critical reflection on how different forms of inference (abduction vs. induction) shape the epistemological, political, and artistic possibilities, and limitations, of computational culture.

Lecturer

Mikkel Rørbo, Luke Stark, Sun-ha Hong, Shaka McGlotten, Daniel Susser, Alex Quicho